The compact powerhouse for computer vision

As an alternative to GigE Vision Cameras

Edge AI can have a transformative role in resource management and environmental stewardship. It is – in the words of Fabio Violante, CEO of Arduino – “a crucial technology in this world of finite resources. It allows us to monitor and optimize consumption in real time: so the use of electricity or water, for example, can be minimized not just for today, but for the future. Manufacturing, agriculture and logistics can reduce their impact, with huge potential for cost savings as well as lowering our carbon footprint.”

In addition to optimizing resource and energy use, edge AI-powered systems can lead to significant cost savings by foreseeing equipment failures, through what is known as predictive maintenance. Arduino® products such as the Opta, Portenta Machine Control and Portenta H7 can all be used by enterprises in any industry to reap the benefits of edge AI.

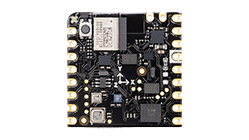

In this article, though, we focus on the Nicla family of intelligent sensors, and in particular on the Nicla Vision, which can be deployed to detect and identify visual anomalies for use in predictive maintenance.

Pros and cons of machine learning at the edge with microcontrollers

AI encompasses a wide spectrum of technologies including machine learning as well as natural language processing, robotics, and more. Machine learning (ML) on powerful computers has been around for a while, but is rather new territory on microcontrollers.

Microcontrollers bring several advantages. First, they can run at very low power on batteries for a long time. You could even put the processor to sleep and wake it only when the camera or the on-board proximity sensor registers activity, and then run the more power-hungry tasks that analyze the input data. Second, ML models on a microcontroller can run without an internet connection, as they don’t need to exchange data with the Cloud, which facilitates real-time applications. It also means that you can install distributed ML solutions in places where there is no internet connection, reaping all the benefits of edge computing. Finally, processing data locally means that everything stays on the device, ensuring data privacy.
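The sleep-and-wake pattern described above can be sketched in a few lines of plain Python. This is only an illustration of the control flow, not board firmware: the function name `run_duty_cycle`, the readings and the threshold are all hypothetical, and on a real device the "skip" branch would be a low-power sleep rather than a loop iteration.

```python
from typing import Callable, Iterable, List


def run_duty_cycle(
    proximity_readings: Iterable[int],
    wake_threshold: int,
    analyze: Callable[[int], str],
) -> List[str]:
    """Run the expensive `analyze` step only when a reading reaches the threshold."""
    results = []
    for tick, reading in enumerate(proximity_readings):
        if reading < wake_threshold:
            # No activity: a real device would remain asleep here.
            continue
        # Activity detected: wake up and run the power-hungry task.
        results.append(analyze(tick))
    return results


# Hypothetical sensor trace: higher values mean more activity in front of the sensor.
readings = [3, 2, 40, 55, 4, 1, 60]
woken = run_duty_cycle(
    readings,
    wake_threshold=30,
    analyze=lambda tick: f"inference at tick {tick}",
)
print(woken)
```

In this trace the analysis step runs on three of the seven ticks, which is the point of the pattern: the costly work happens only when there is something to look at.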

How machine learning has propelled computer vision forward

Computer vision allows machines to understand visual information and act upon it, mirroring how the human eye works to provide data to the brain. Integrating AI algorithms into computer vision projects significantly enhances any system’s capacity to perceive, interpret, and respond to visual cues.

For example, the main factor holding back visual anomaly detection in the past was that it was really hard to write a control loop that distinguishes what is being seen, especially when objects have a similar appearance. In other words, abilities that are obvious to the human visual system (e.g. identifying a horse and a donkey as different animal species) were complex to replicate. Machine learning changes that: provide a computer with enough labeled data, and it will figure out an algorithm. In machine learning with sensor data, the control loop is implemented by means of a neural network, which in effect writes it for us.

To train an ML model to classify an image, we need to feed it with image data of the object we are interested in identifying. The training process hinges on a concept called supervised learning: this means that we train the model with known data and tell it, while it’s “practicing” its predictions, whether they are correct or not. This is similar to what happens when a toddler points at a donkey and says “horse”, and you tell them that it’s actually a donkey. The next few times they see a donkey they may still get it wrong, but over time, under your supervision, they will learn to tell a horse from a donkey. Conceptually, that’s also how an ML model learns and becomes able to carry out simple image classification by answering questions such as “Do I see a donkey?” with Yes or No.
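To make the "predict, get corrected, adjust" loop concrete, here is a minimal sketch using a single perceptron in plain Python. It is a deliberately tiny stand-in for a real image model: the two features per sample (imagine scaled ear length and body height) and all the values are invented for illustration, and real image classifiers learn from pixels with far larger networks.

```python
def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Supervised learning: nudge the weights whenever a prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            prediction = predict(weights, bias, features)
            error = label - prediction  # 0 when correct, +1 or -1 when wrong
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Answer "Do I see a donkey?" with 1 (yes) or 0 (no)."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical labeled data: (ear_length, body_height), label 1 = donkey, 0 = horse.
samples = [(0.9, 0.3), (0.3, 0.9), (0.8, 0.4), (0.4, 0.8), (0.85, 0.35), (0.35, 0.85)]
labels = [1, 0, 1, 0, 1, 0]

weights, bias = train_perceptron(samples, labels)
print(predict(weights, bias, (0.88, 0.32)))  # unseen donkey-like sample
print(predict(weights, bias, (0.32, 0.88)))  # unseen horse-like sample
```

The labels play the role of the adult correcting the toddler: each wrong answer shifts the weights slightly, until the model separates the two classes on its own.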

FOMO, or how object detection is enabled on microcontrollers

When implementing computer vision projects on embedded devices, two of the most typical applications are image classification and object detection tasks.

Microcontrollers might not be able to run ML models to process high-resolution images at high frame rates, but there are some interesting aspects that enable them to be used for computer vision. By using a platform like Edge Impulse®, it is possible to simplify the process of creating machine learning models and take computer vision to the next level by enabling object detection on microcontrollers. Using the earlier donkey example, object detection can be summed up as “Do I see a donkey, where in the frame is it, and how many donkeys do I see?”.

Using what Edge Impulse have catchily referred to as FOMO (Faster Objects, More Objects) it is now possible to run object counting on the smallest of devices. FOMO is a completely novel ML architecture for object detection, object tracking and object counting. It can be deployed on any Arm®-based Arduino with at least a Cortex®-M4F. On the Nicla Vision it can run at up to 30 fps (frames per second), tracking moving objects within the frame in near real-time.

To emphasize how the Edge Impulse platform facilitates computer vision on the smallest of devices: image classification can operate on devices with as little as 50 kB of memory, and object detection that involves complex images, video analysis and object tracking can run on devices with as little as 256 kB of memory.

Given the tiny form factor of the Nicla Vision – basically no larger than a postage stamp (22.86 x 22.86 mm) – what it can do is impressive: it processes a 96 x 96 pixel image and produces a 12 x 12 heat map, giving 144 cells in which objects can be detected. The tiny size means it can physically fit into most scenarios, and because it can be configured to require very little energy, it can be powered by a battery for standalone applications.
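The relationship between the 96 x 96 input and the 12 x 12 heat map can be sketched as follows. This is a simplified illustration rather than Edge Impulse's actual post-processing: it assumes each heat-map cell covers an 8 x 8 pixel patch (96 / 12), treats every cell above a hypothetical threshold as one detection, and uses made-up scores. A production pipeline would typically also merge neighboring cells that belong to the same object.

```python
INPUT_SIZE = 96                          # input image is 96 x 96 pixels
GRID_SIZE = 12                           # heat map is 12 x 12 cells
CELL_SIZE = INPUT_SIZE // GRID_SIZE      # each cell covers 8 x 8 pixels


def decode_heat_map(heat_map, threshold=0.5):
    """Turn heat-map cells above the threshold into (x, y, score) centroids in pixels."""
    detections = []
    for row, cells in enumerate(heat_map):
        for col, score in enumerate(cells):
            if score >= threshold:
                center_x = col * CELL_SIZE + CELL_SIZE // 2
                center_y = row * CELL_SIZE + CELL_SIZE // 2
                detections.append((center_x, center_y, score))
    return detections


# Hypothetical model output: an empty heat map with two confident cells.
heat_map = [[0.0] * GRID_SIZE for _ in range(GRID_SIZE)]
heat_map[2][3] = 0.9
heat_map[10][7] = 0.7

detections = decode_heat_map(heat_map)
print(len(detections), "objects:", detections)
```

Counting objects then reduces to counting the detections, and the centroid coordinates answer the "where in the frame" question without the cost of full bounding boxes.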

As mentioned, memory is a crucial factor for embedded machine learning, and it is the MCU’s SRAM that is used during machine learning inference. Nicla Vision’s MCU, the STM32H747, is equipped with 1 MB of SRAM, shared by both cores. This MCU also incorporates 2 MB of flash, mainly for code storage, and the board adds 16 MB of QSPI flash, which allows even larger machine learning models to be embedded.
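A rough back-of-the-envelope check shows how these figures relate. The sketch below is only arithmetic under stated assumptions: it treats 1 MB as 1024 x 1024 bytes, uses the 256 kB object detection figure quoted earlier as the model's working budget, and assumes a single 96 x 96 grayscale frame at one byte per pixel. It ignores everything else that competes for SRAM (firmware, stacks, the second core), so the real headroom will be smaller.

```python
KIB = 1024
SRAM_BYTES = 1024 * KIB               # 1 MB of MCU SRAM (assumed to mean 1024 KiB)
DETECTION_BUDGET_BYTES = 256 * KIB    # object detection figure quoted in the article


def frame_buffer_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def remaining_sram(total_bytes, *allocations):
    """Bytes left after the given allocations; raises if they do not fit."""
    used = sum(allocations)
    if used > total_bytes:
        raise ValueError(f"allocations need {used} bytes, only {total_bytes} available")
    return total_bytes - used


grayscale_frame = frame_buffer_bytes(96, 96, 1)
headroom = remaining_sram(SRAM_BYTES, DETECTION_BUDGET_BYTES, grayscale_frame)
print(f"frame: {grayscale_frame} bytes, headroom: {headroom} bytes")
```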

All of this makes Nicla Vision the ideal solution to develop or prototype with on-device image processing and machine vision at the edge, for asset tracking, object recognition, predictive maintenance and more.

Insightful examples of applications with Nicla Vision

So, what can you accomplish when using computer vision on the Nicla Vision?

Automate anything. Using computer vision on the Nicla Vision means you can literally automate anything: check every product is labeled before it leaves the production line; unlock doors only for authorized personnel, and only if they are wearing PPE correctly; use AI to train it to regularly check analog meters and beam readings to the Cloud; teach it to recognize thirsty crops and automatically turn the irrigation on when needed. Anytime you need to act or make a decision depending on what you see, let Nicla Vision watch, decide and act for you. You can also attach sophisticated sensors to the board and run machine learning models to process data that comes from sources other than the camera.

Allow machines to see what you need. Interact with kiosks with simple gestures, create immersive experiences, work with cobots at your side. Nicla Vision allows computers and smart devices to see you, recognize you, understand your movements and make your life easier, safer, more efficient, better.

Keep an eye out for events otherwise out of your range. Let Nicla Vision be your eyes: detecting animals on the other side of the farm, letting you answer your doorbell from the beach, constantly checking on the vibrations or wear of your industrial machinery. It’s your always-on, always precise lookout, anywhere you need it to be.

Open source makes it simple

Nicla Vision is part of Arduino’s complete ecosystem of hardware products, software solutions and cloud services, offering versatile and modular solutions for applications in any possible industry. The company’s signature open-source approach translates into a huge range of benefits for business: license fees and vendor lock-in are obliterated; NREs and labor costs fall; extensive documentation and prompt support are always at hand. All in all, the overarching concept that informs user experience is simplicity.

“Simplicity is the key to success. In the tech world, a solution is only as successful as it is widely accepted, adopted and applied — and not everyone can be an expert. You don’t have to know how electricity works to turn on the lights, how an engine is built to drive a car, or how large language models were developed to write a ChatGPT prompt: that plays a huge part in the popularity of these tools,” Violante adds. “That’s why, at Arduino, we make it our mission to democratize technologies like edge AI — providing simple interfaces, off-the-shelf hardware, readily available software libraries, free tools, shared knowledge, and everything else we can think of. We believe edge AI today can become an accessible, even easy-to-use option, and that more and more people across all industries, in companies of all sizes, will be able to leverage this innovation to solve problems, create value, and grow.”

To find out more about how you can leverage computer vision using the Arduino Nicla Vision and Edge Impulse, start from the useful tutorial here.

Conclusion

Visit our website to find out more about the Arduino Nicla Vision and the Portenta family, the compact powerhouse for computer vision.
