Precise machine control using voice command and edge computing

Imagine a factory floor where a worker, busy lubricating the gearboxes with grease-soiled hands, says, 'Turn the conveyor off.'

The machine responds instantly with pinpoint precision. Although it may appear to be science fiction, it is a reality. The smart factories of today are driven by a combination of artificial intelligence, voice commands, and edge computing, offering unprecedented precision, flexibility, and accessibility.

Voice command technology, powered by natural language processing (NLP) and speech recognition, allows operators to issue commands for tasks such as running conveyors or opening and closing control valves and dampers, without complex interfaces or manual inputs. Combined with edge computing, these technologies deliver low latency, enhanced security, and scalability. Edge computing processes data locally for real-time responsiveness, making it ideal for industrial and experimental applications. This article explores how AI-driven voice commands and edge computing are reshaping industrial automation, reducing downtime, and improving efficiency, using resources available from Farnell Electronics.

Voice command technology is revolutionising industrial automation by enhancing precision, efficiency, and accessibility. With the help of AI, particularly NLP and speech recognition, voice user interfaces (VUIs) allow operators to control complex machinery with simple spoken instructions, reducing dependence on complex manual interfaces. This technology, analogous to a 'digital assistant' for machines, helps transform factory floors and streamline factory operations. At the core of voice command systems are advanced AI algorithms that enable VUIs to interpret spoken commands with remarkable accuracy, typically achieving 90–95% recognition rates. NLP algorithms, such as those based on recurrent neural networks (RNNs) and transformer models, analyse speech patterns to understand context and intent, even for complex instructions like 'set conveyor speed to 10 meters per minute.'
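To make the idea of extracting intent from a transcript concrete, here is a minimal Python sketch. It is only a rule-based stand-in for the neural NLP models mentioned above: a single regular expression handles one command family. The `Intent` class, the `parse_command` function, and the `set_speed` action name are all hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Intent:
    """Structured form of a spoken command (hypothetical schema)."""
    action: str
    device: str
    value: Optional[float] = None
    unit: Optional[str] = None


# Assumed grammar for one command family: "set <device> speed to <number> meters per minute".
# Both "meters" and "metres" are accepted.
SET_SPEED = re.compile(
    r"set (?P<device>\w+) speed to (?P<value>\d+(?:\.\d+)?) met(?:er|re)s per minute",
    re.IGNORECASE,
)


def parse_command(transcript: str) -> Optional[Intent]:
    """Return an Intent if the transcript matches the pattern, else None."""
    match = SET_SPEED.search(transcript)
    if match is None:
        return None
    return Intent(
        action="set_speed",
        device=match["device"].lower(),
        value=float(match["value"]),
        unit="m/min",
    )


print(parse_command("Set conveyor speed to 10 meters per minute."))
```

A production system would replace the regular expression with a trained model, but the output would play the same role: a structured intent that downstream control logic can validate before acting on it.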

Figure 1 shows the application of voice command execution in the seamless operation of the plant under real-world conditions.

Figure 1: Voice recognition in industrial control application

Speech recognition systems enable precise control of industrial equipment by translating human speech into machine-executable commands in real time. These systems are often built on hidden Markov models or deep learning and convert audio data into actionable commands.

However, despite such advancements, several challenges come to the fore when implementing robust voice control in industrial settings. Industrial environments are by nature noisy, with background sounds from machinery, alarms, and human activity posing significant obstacles to voice recognition systems. Additionally, variations in accents, speed of speech, and pronunciation can affect accuracy.

Modern AI algorithms effectively address these hurdles. Advanced noise cancellation and suppression algorithms, often employing multiple microphones or beamforming technology, can isolate the operator's voice from ambient noise. AI models are now trained on much larger and more diverse datasets that cover accent and dialect variation. In addition, on-device or edge-based machine learning allows for speaker adaptation and environment-specific model tuning, where the system learns its users' unique nuances and the operational soundscape over time, progressively improving its accuracy and reliability.
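As an illustration of the beamforming idea, the following Python sketch implements delay-and-sum, one of the simplest beamforming techniques: each microphone channel is shifted so the operator's voice lines up across channels, and the channels are then averaged. The function name `delay_and_sum`, the integer sample delays, and the toy signals are assumptions made for this example. The noise here is deliberately constructed to cancel exactly; real factory noise would only be attenuated.

```python
from typing import List, Sequence


def delay_and_sum(channels: Sequence[Sequence[float]], delays: Sequence[int]) -> List[float]:
    """Align each channel by its integer sample delay, then average the channels."""
    if len(channels) != len(delays):
        raise ValueError("one delay per channel is required")
    # Usable length once each channel's leading delay has been skipped
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    output = []
    for n in range(length):
        total = sum(ch[n + d] for ch, d in zip(channels, delays))
        output.append(total / len(channels))
    return output


# Toy data: the voice reaches microphone 1 one sample later than microphone 0.
voice = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0]
noise = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
mic0 = [v + n for v, n in zip(voice, noise)]
mic1 = [0.0] + [v - n for v, n in zip(voice, noise)]  # delayed copy, opposite-phase noise

print(delay_and_sum([mic0, mic1], delays=[0, 1]))
```

The voice adds coherently after alignment while the noise does not, which is why arrays of several microphones improve recognition in loud environments.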

The applications of this refined voice command technology in industrial automation are vast and enhance both precision and operational efficiency:

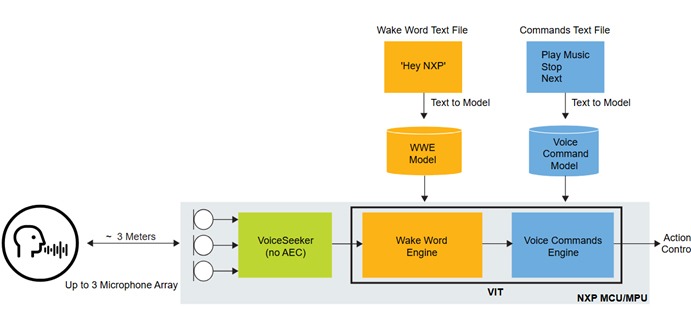

Voice control-based industrial automation technology consists of the following important stages (as represented in Figure 2), each responsible for converting voice commands into machine actions:

Figure 2: Building blocks of voice control-based industrial automation

Conventional voice-command systems often rely on cloud-based processing, which introduces latency due to data transmission and server response times. In contrast, edge computing enhances the precision of voice command-based industrial automation by enabling real-time processing, reduced latency, and enhanced security. It processes voice commands locally and minimises reliance on cloud-based systems, providing the faster response times and robust performance that are critical for industrial applications. For example, a worker may issue a voice command to halt a conveyor belt in a noisy factory environment. With edge processing, the command is interpreted and executed within milliseconds, ensuring safety and operational continuity. This local processing also enhances privacy by keeping sensitive voice data and control commands on-premises, protecting proprietary processes from external threats.

While local data processing is advantageous, it is often limited to basic keywords and commands that require precise wording or phrasing. For example, turning on a blower fan may require saying the exact phrase 'Hello, XXX (wake word), blower fan on (voice command)' without any variation.
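The exact-phrase behaviour described above can be sketched in a few lines of Python. This is an illustrative assumption of how a keyword matcher might work, not the method of any particular product: the wake word `hello machine`, the `COMMANDS` table, and the function names are hypothetical placeholders.

```python
from typing import Optional, Tuple

WAKE_WORD = "hello machine"  # hypothetical wake word standing in for 'Hello, XXX'

# Hypothetical table of exact phrases mapped to (device, desired state)
COMMANDS = {
    "blower fan on": ("blower_fan", True),
    "blower fan off": ("blower_fan", False),
    "conveyor off": ("conveyor", False),
}


def normalise(text: str) -> str:
    """Lower-case the text, drop punctuation, and collapse whitespace."""
    kept = "".join(c for c in text.lower() if c.isalnum() or c.isspace())
    return " ".join(kept.split())


def match_keyword_command(transcript: str) -> Optional[Tuple[str, bool]]:
    """Return the mapped action only when wake word and phrase match exactly."""
    words = normalise(transcript)
    if not words.startswith(WAKE_WORD + " "):
        return None  # wake word missing, so the utterance is ignored
    phrase = words[len(WAKE_WORD):].strip()
    return COMMANDS.get(phrase)  # None for any wording not in the table


print(match_keyword_command("Hello, machine, blower fan on"))
print(match_keyword_command("Hello machine, please switch on the blower fan"))
```

The second call returns `None` even though the request is clear to a human listener, which is the limitation of purely local keyword spotting that the article goes on to address.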

However, combining edge and cloud-based processing in a hybrid system (as shown in Figure 3) adds the ability to run far more complex algorithms and NLP models. Thus, when turning on a blower fan, the operator can phrase the request freely, and the system will still understand the context of what is being asked, such as 'Temperature has exceeded the limit; kindly turn on the blower fan.'

Figure 3: Edge computing-based voice command system
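One way to picture the hybrid approach is as a two-tier router: exact phrases are resolved on the edge device, and anything else is escalated to a more capable interpreter. The Python sketch below assumes this design for illustration only. `route_command`, `LOCAL_COMMANDS`, and `fake_cloud_interpreter` are hypothetical names, and the cloud tier is a trivial stub rather than a real NLP service or network call. Wake-word detection is assumed to have happened upstream.

```python
from typing import Callable, Optional, Tuple

Action = Tuple[str, bool]  # (device, desired state)

# Hypothetical exact phrases the edge device can resolve by itself
LOCAL_COMMANDS = {
    "blower fan on": ("blower_fan", True),
    "blower fan off": ("blower_fan", False),
}


def route_command(
    transcript: str,
    cloud_interpreter: Optional[Callable[[str], Optional[Action]]] = None,
) -> Tuple[Optional[Action], str]:
    """Try the local keyword table first; fall back to the cloud tier if available."""
    phrase = transcript.lower().strip(" .!?")
    action = LOCAL_COMMANDS.get(phrase)
    if action is not None:
        return action, "edge"
    if cloud_interpreter is not None:
        return cloud_interpreter(transcript), "cloud"
    return None, "unhandled"


def fake_cloud_interpreter(transcript: str) -> Optional[Action]:
    """Stub standing in for a remote NLP model (assumption, not a real API)."""
    text = transcript.lower()
    if "blower fan" in text and "turn on" in text:
        return ("blower_fan", True)
    return None


print(route_command("Blower fan on."))
print(route_command(
    "Temperature has exceeded the limit; kindly turn on the blower fan.",
    fake_cloud_interpreter,
))
```

Keeping the exact-phrase path on the edge means time-critical commands do not depend on network availability, while free-form requests can still be understood when the cloud tier is reachable.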

Controlling and operating machines by voice command is no longer a futuristic vision; it is now a practical reality. Factories can dynamically adapt to evolving production demands, and the growing demand for precision and flexibility in industrial automation underscores the significance of these advancements. By integrating AI-driven voice command control with real-time processing at the edge, industries achieve unprecedented levels of control. Farnell Electronics provides associated hardware solutions for precise machine control, making voice a universally understood language on the factory floor.